Me: Where are you?

Prospective client: Massachusetts

Me: I’m 3,000 miles away! Call 911!

Prospective client: No, it’s my website! We lost 50% of our traffic overnight! Help!

Me: How much can you afford to pay?

Okay, that’s not actually how the conversation went, but it might as well have, given the fact that I came in and performed a strategic SEO audit, the client made all the changes I recommended, and, as a result, a year later they’ve increased organic traffic by 334%!

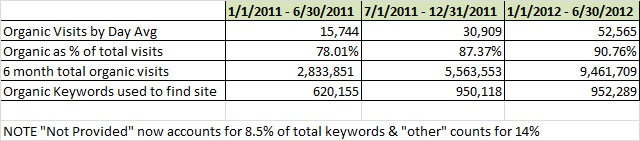

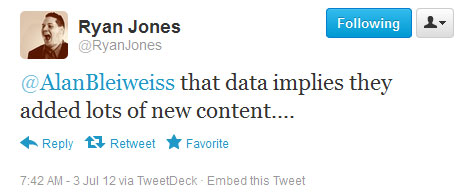

Now, when you look at that data, you might assume several things. Ryan Jones saw those numbers and tweeted just such an assumption:

Rather than leaving people assuming what was done to get such insanely great results, I figured it would make sense to write an actual case study. So once again, I’m going to ask you to indulge my propensity for excruciatingly long articles so that I can provide you with the specific insights gained, the tasking recommended, the changes made, and the lessons learned.

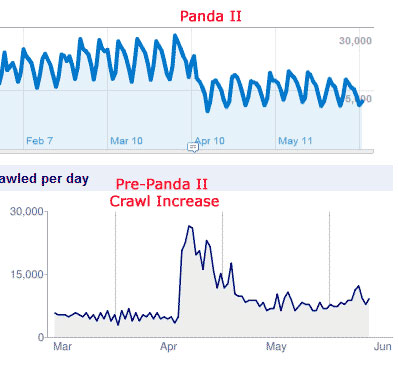

First Task – Look at the Data for Patterns

One of the first things I do when performing a strategic SEO audit is look at the raw data from a 30,000-foot view to see if I can find clues as to the cause of a site’s problems. In this case, I went right into Google Analytics and looked at historic traffic in the timeline view going back to the previous year. That way I could both check for seasonal factors on this site and compare year-over-year.

That’s when I discovered the site was not just hit by Panda. The problem was much more deeply rooted.

The MayDay Precursor

The data you see above is from 2010. THAT major drop was from Google’s MayDay update. As you can see, daily organic visits plummeted as a result of the MayDay update. That’s something I see in about 40% of the Panda audits I’ve done.

From there, I then jumped to the current year and noticed that, in this case, MayDay was a precursor to Panda. Big time.

What’s interesting to note from this view is the site wasn’t caught up in the original Panda. The hit came the day of Panda 2.0.

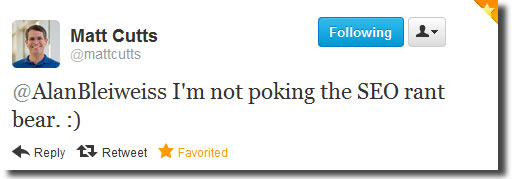

Okay, let me rephrase that. Both of my claims about the site being hit so far, MayDay and Panda 2.0, are ASSUMPTIONS. Because we work in a correlation industry and since Matt Cutts refuses to poke this SEO bear, I doubt he’ll ever give me a personal all-access pass to his team’s inner project calendar.

Correlation-Based Assumptions

Since we don’t have the ability to know exactly what causes things in an SEO world, we can only proceed based on what Google chooses to share with us. This means it’s important to pay attention when they do share, even if you think they’re being misleading. More often than not, I have personally found that they really are forthcoming with many things.

That’s why I rely heavily on the work of the good Doctor when it comes to keeping me informed because I can’t stay focused on Google forums info. I’m talking about the work Dr. Pete has been doing to keep the SEOmoz Google Update Timeline current.

An Invaluable Correlation Resource

The SEOmoz update timeline comes into play every time I see any significant drop in a site’s organic traffic after they call me in to help them. I can go in there and review every major Google change that’s been publicly announced all the way back to 2002. You really need to make use of it if you’re doing SEO audits. As imperfect as correlation work can be, I’ve consistently found direct matches between entries in that timeline and the major site drops that bring people to me for help.
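For readers who want to script this kind of correlation check, here is a minimal sketch. It assumes you have exported daily organic visits from your analytics tool and copied update dates by hand from the timeline. The function names, the visit numbers, and the "Example update" entries are all hypothetical placeholders, not data from this audit.

```python
from datetime import date, timedelta

def largest_drop(daily_visits):
    """Return (day, fractional_change) for the steepest day-over-day decline."""
    days = sorted(daily_visits)
    worst_day, worst_change = None, 0.0
    for prev, cur in zip(days, days[1:]):
        before = daily_visits[prev]
        if before == 0:
            continue  # avoid dividing by zero on days with no recorded visits
        change = (daily_visits[cur] - before) / before
        if change < worst_change:
            worst_day, worst_change = cur, change
    return worst_day, worst_change

def nearby_updates(drop_day, updates, window_days=3):
    """List the updates whose date falls within window_days of the drop."""
    window = timedelta(days=window_days)
    return [name for name, day in updates.items() if abs(day - drop_day) <= window]

# Hypothetical sample data, for illustration only.
visits = {
    date(2020, 3, 1): 10000,
    date(2020, 3, 2): 9800,
    date(2020, 3, 3): 5100,
    date(2020, 3, 4): 5000,
}
# Placeholder entries; in practice, copy the real dates from the timeline.
updates = {
    "Example update A": date(2020, 1, 15),
    "Example update B": date(2020, 3, 3),
}

drop_day, change = largest_drop(visits)
matches = nearby_updates(drop_day, updates)
print(drop_day, f"{change:.0%}", matches)
```

A match here is still only a correlation. Day-over-day comparisons are also noisy on sites with strong weekday patterns, so comparing each day against the same weekday in the prior week may be a better fit for real data.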

The Next Step – Is It Actually a Google-Isolated Issue?

Once I see a big drop in the organic traffic for a site, I dig deeper to see if it’s actually a Google hit or if it might be all search engines or even a site-wide issue. Believe me, this is a CRITICAL step. I’ve found some sites that “appeared” to be hit by search engine issues, but it turned out that the site’s server hit a huge bottleneck. Only by looking at ALL traffic vs. Organic Search vs. Google specific will you be able to narrow down and confirm the source.

In this case, back during the Panda period, organic search accounted for close to 80% of site-wide traffic totals. Direct and Referrer traffic showed no dramatic drop over the same time period, nor did traffic from other search sources.
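The segment comparison described above can be sketched in a few lines. This is an illustration under stated assumptions: the segment names, the visit counts, and the -20% threshold are hypothetical and are not taken from the client’s analytics.

```python
def percent_change(before, after):
    """Percentage change from one period to the next."""
    return (after - before) / before * 100

def isolate_drop(before, after, threshold=-20.0):
    """Return per-segment changes and the segments that fell past the threshold."""
    changes = {seg: percent_change(before[seg], after[seg]) for seg in before}
    hit = [seg for seg, pct in changes.items() if pct <= threshold]
    return changes, hit

# Hypothetical visits per segment for equal-length periods before and after the drop.
before = {"google_organic": 7500, "other_search": 500, "direct": 1200, "referral": 800}
after = {"google_organic": 3750, "other_search": 490, "direct": 1212, "referral": 792}

changes, hit = isolate_drop(before, after)
for segment, pct in changes.items():
    print(f"{segment}: {pct:+.1f}%")
print("segments hit:", hit)
```

If every segment shows up in the `hit` list, the cause is more likely site-wide (a server bottleneck, for example) than a single search engine.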

Curiosity Insight – Google Probes Before Big Hits

Not always, but once in a while when Google’s about to make a major algorithm change, you’ll be able to see in hindsight that they turned up the crawl volume in big ways.

The SEO Audit – Process

Here’s where the rubber meets the road in our industry. You can wildly guess all you want about what a site needs to do to improve. You can just throw the book at a site in terms of “the usual suspects.” You can spend a few minutes looking at the site and think, “I’ve got this.” Or you can spend hundreds of hours scouring thousands of pages and reviewing reams of spreadsheet reports and dashboards, all to come up with a plan of action.

Personally, I adopt the philosophy that a happy middle ground exists, one where you look at just enough data, examine just enough pages on a site, and spend just enough time at the code level to find those unnatural patterns I care so much about because it’s the unnatural patterns that matter. If you’ve examined two category pages, you’re almost certainly going to find that the same fundamental problems that are common to those two pages will exist across most or all of the other category pages. If you look at three or four product pages (randomly selected), any problems that are common to most or all of those pages will likely exist on all of the thousands or tens of thousands of product pages.

So that’s what I do. I scan a sampling of pages. I scan a sampling of code. I scan a sampling of inbound link profile data and social signal data.
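One way to pull that kind of random sample, assuming you already have a list of URLs grouped by template type, is sketched below. The template names, URLs, and the `sample_by_template` helper are hypothetical, and the sample size of three simply mirrors the “three or four product pages” mentioned above.

```python
import random

def sample_by_template(urls_by_template, per_template=3, seed=0):
    """Pick a small random sample of URLs for each page template."""
    rng = random.Random(seed)  # fixed seed so the same sample can be re-reviewed later
    return {
        template: rng.sample(urls, min(per_template, len(urls)))
        for template, urls in urls_by_template.items()
    }

# Hypothetical URL inventory grouped by template.
inventory = {
    "category": [f"/category/{i}" for i in range(40)],
    "product": [f"/product/{i}" for i in range(5000)],
    "home": ["/"],
}

picked = sample_by_template(inventory)
for template, urls in picked.items():
    print(template, urls)
```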

Comprehensive Sampling

Don’t get me wrong. I don’t just look at a handful of things. My strategic audits really are comprehensive in that I look at every aspect of a site’s SEO footprint. It’s just that at the strategic level, when you examine every aspect of that footprint, I’ve personally found there’s not enough of a significant gain when performing more than a sampling review of those factors to justify the time spent and the cost to clients. The results that come when you implement one of my audit action plans have proven over the last several years to be quite significant.

Findings and Recommendations

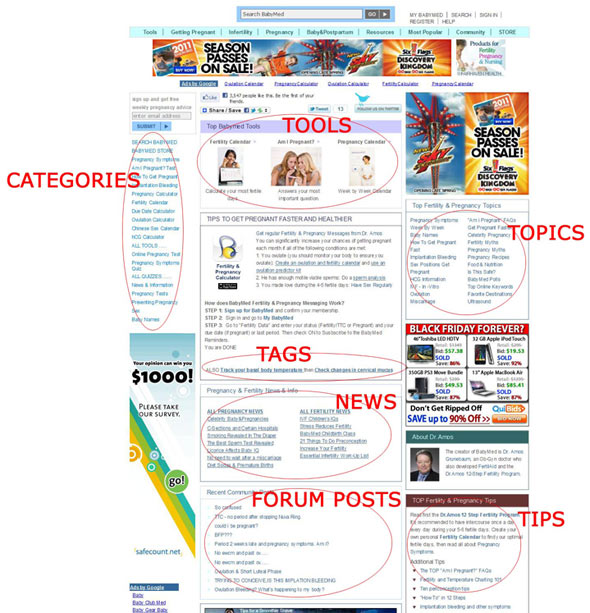

For this particular site, here’s what I found and what I ended up recommending.

The site’s Information Architecture (IA) was a mess. It had an average of more than 400 links on every page, content was severely thin across most of the site, and the User Experience (UX) was the definition of confused.

That’s why click-through data is so vital at this stage of an audit. Back when I did this, Google didn’t yet have their on-page Analytics to show click-through rates, and I didn’t want to take the time to get something like Crazy-Egg in place for heat maps. Heck, I didn’t NEED heat maps. I had my ability to put myself in the common user’s shoes. That’s all I needed to do to know this site was a mess.

And the problems on the homepage were repeated on the main category pages, as well.
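A link count like the one cited above (more than 400 links per page) can be approximated with Python’s standard-library HTML parser. This is a rough sketch, not the tooling used in the audit: the `LinkCounter` class and the sample markup are hypothetical, and it only counts `<a>` tags that carry a non-empty `href`.

```python
from html.parser import HTMLParser

class LinkCounter(HTMLParser):
    """Count <a> tags that have a non-empty href attribute."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value is None for bare attributes.
        if tag == "a" and any(name == "href" and value for name, value in attrs):
            self.count += 1

def count_links(html):
    parser = LinkCounter()
    parser.feed(html)
    parser.close()
    return parser.count

# Hypothetical page fragment: three real links and one named anchor without href.
sample = (
    '<nav><a href="/a">A</a><a href="/b">B</a></nav>'
    '<p><a name="top">no href</a> <a href="/c">C</a></p>'
)
print(count_links(sample))
```

Running it over the saved HTML of a few sampled pages per template gives a quick sense of whether link overload is a template-level problem.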

Topical Confusion of Epic Proportions

Note the little box with the red outline in the above screen capture. That’s the main content area for the category page, and all those links all around it are merely massive topical confusion, dilution, and noise. Yeah, and just like the homepage, you’re only looking at the TOP HALF of that category page.

Duplicate content was ridiculous. At the time of the audit, there were more than 16,000 pages on the site and thousands of “ghost” pages generated by very poor Information Architecture, yet Google was indexing only about 13,000. Article tags were automatically generating countless “node” pages, and duplication along with all the other issues completely confused Google’s ability to “let us figure it out.”

Site Speed Issues

One of the standard checks I do when conducting an audit is run a sampling of page URLs through Pingdom and URIValet to check on the speed factor. Nowadays I also cross reference that data with page level speed data provided right in Google Analytics, an invaluable three-way confirmation check. In the case of this site, there were some very serious speed problems. We’re talking about some pages taking 30 seconds or more to process.

Whenever there are serious site speed issues, I look at Pingdom’s Page Analysis tab. It shows the process time breakdown, times by content type, times by domain (critical in today’s cloud-driven content delivery world), size by content type, and size by domain. Cross reference that to URIValet’s content element info, and you can quickly determine the most likely cause of your biggest problems.

When Advertising Costs You More Than You Earn from It

In the case of this site, they were running ads from four different ad networks. Banner ads, text link ads, and in-text link ads were on every page of the site, often with multiple instances of each. And the main problem? Yeah, there was a conflict at the server level processing all those different ad block scripts. It was killing the server.

Topical Grouping Was a Mess

Another major problem I found while performing the audit was that, on top of a lot of thin content and a lot of “perceived” thin content (due to site-wide and sectional common elements overcrowding the main page content), the content was also poorly organized. Links in the main navigation bar were going to content that was totally not relevant to the primary intent of the site. Within each main section, too many links were pointing to other sections, and there was no way search engines could truly validate that “this section is really about this specific topical umbrella.”

Topical Depth Was Non-Existent

Even though the site had tens of thousands of pages of content, keyword phrases had been only half-heartedly determined, assigned, and referenced. Match that factor to the over-saturation of what were ultimately links to only slightly related other content, a lack of depth in the pure text content on category and sub-category pages, sectional and site-wide repetition of headers, navs, sidebars, callout boxes, and footer content, and the end result was that search engines couldn’t really grasp that there really was a lot of depth to each of the site’s primary topics and sub-topics.

Inbound Link Profile

A review of the inbound link profile showed a fairly healthy mix of existing inbound links; however, there was a great deal of weakness in volume specific to a diversity of links using anchor text variations, especially when comparing the site’s profile to that of top competitors.

Putting Findings Into Action

Another critical aspect of my audit work is taking those findings and putting them together in a prioritized action plan document. Central to this notion is knowing what to include and what to leave out. For a site with this much chaos, I knew there was going to be six months of work just to tackle the biggest priorities.

Because of this awareness, the first thing I did was focus my recommendations on site-wide fixes that could be performed at the template level. After all, most sites only have one, two, or a few primary templates that drive the content display.

From there, I leave out what I consider to be “nice to have” tasking, things a site owner or the site team COULD be doing but that would only be a distraction at this point. Those are the things that can be addressed AFTER all the big picture work gets done.

Tasking for This Site

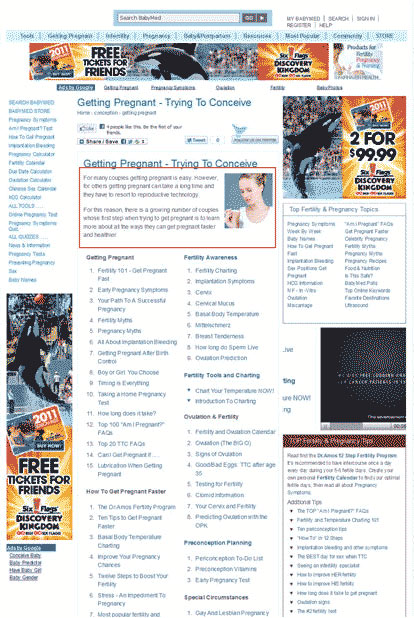

After the rest of my audit review for this site, I came up with the following breakdown of tasking:

Improved UX

- Eliminated off-intent links from main nav

- Reordered main nav so most important content nav is to the left

- Reduced links on home page from 450 down to 213

- Rearranged layout on home page and main category pages for better UX

- Removed in-text link ads

- Reduced ad providers from four networks down to two

- Reduced ad blocks from seven down to five on main pages

- Reduced ad blocks on sub-cat and detail pages from nine down to seven

- Eliminated ad blocks from main content area

- Rewrote page titles

- Modified URLs and applied 301s to old version

- Rewrote content across all 100 pages to reflect refined focus

- Reorganized content by category type

- Moved 30% of sub-category pages into more refined categories

- Eliminated “node” page indexing

- Called for establishing a much larger base of inbound links

- Called for greater diversity of link anchors (brand, keyword and generic)

- Called for more links pointing to deeper content
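The “Modified URLs and applied 301s to old version” item is the kind of change where a small script can help. The article does not say how the redirects were built, so the following is only a sketch of one common safeguard: flattening an old-to-new URL map so every old URL redirects to its final destination in a single hop. The `flatten_redirects` helper and the paths are hypothetical.

```python
def flatten_redirects(redirects):
    """Resolve each old URL to its final target so no 301 chains remain."""
    flat = {}
    for start in redirects:
        seen = {start}
        target = redirects[start]
        # Follow the chain until the target is not itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[start] = target
    return flat

# Hypothetical map after two rounds of URL changes.
redirect_map = {
    "/old-a": "/mid-a",
    "/mid-a": "/new-a",
    "/old-b": "/new-b",
}

flat_map = flatten_redirects(redirect_map)
for old, new in flat_map.items():
    print(f"301 {old} -> {new}")
```

The flattened map can then be translated into whatever redirect rules the site’s server or CMS uses.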

Here’s a screen capture of the top half of the NEW, IMPROVED home page.

Massive Changes Take Time

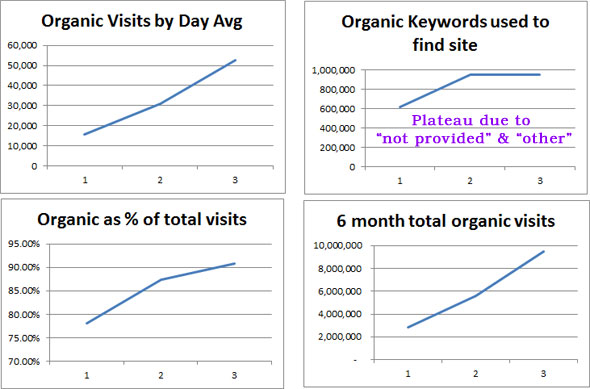

Given how much work had to be done, I knew, as is usually the case with action plans I present to audit clients, that they were in for a long overhaul process that would last several months. I knew they’d start seeing improvements as long as they followed the plan and focused on quality fixes, rather than slap-happy, just-get-it-done methods.

Results Are Epic

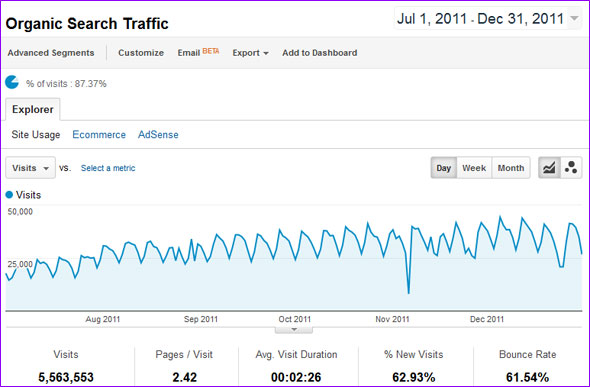

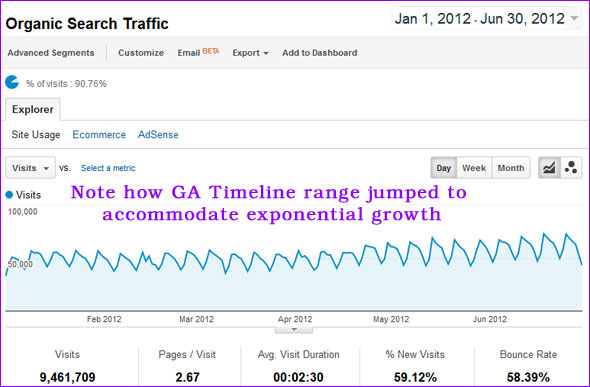

The results that have occurred over the course of the past year have been truly stunning to say the least. I knew the site would see big improvements in organic data. I just had no idea it would be on this scale.

Here are three screen captures of the actual Google Data, just so you can see I’m not making all of this up.

Takeaways

As you might venture to guess, the recovery and subsequent, continued growth has been about as “best case scenario” as you can get. It truly is one of my proudest moments in the industry. The fact that not one single other site had been reported as having yet recovered at the time I performed the audit just means more than words can describe.

Sustainable SEO Really Does Matter

The biggest takeaway I can offer from all of this is that sustainable SEO really does matter. Not only has the site rebounded and gone on to triple its organic count, it has sailed through every other major Google update since. What more can you ask for in correlation between the concept of sustainable SEO best practices and end results?

UX – It’s What’s for Breakfast

I drill the concept of UX as an SEO factor into every brain that will listen. I do so consistently, as do many others in the search industry. Hopefully, this case study will allow you to consider more seriously just how helpful understanding UX can be to the audit evaluation process and the resulting gains you can make with a healthier UX.

IA – An SEO Hinge-Pin

With all the IA changes made, Google now indexes close to 95% of the site content, more than 17,000 pages versus the 13,000 previously indexed. Their automated algorithms are much better able to figure out topical relationships, topical intent, related meanings, and more.

Ads as Shiny Objects

This was a classic case study for many reasons, one of which is the reality that a site owner can become blinded to the advertising revenue shiny object. Yet there comes a point where you cross over the line into “too much.” Too many ads, too many ad networks, too many ad blocks, too many ad scripts confusing the server…you get the idea. By making the changes we made, overall site revenue from ads went up proportionally, as well, so don’t let the concept that “more is always better” fool you.

Not Provided Considerations – Long Tail Magic

This observation applies to just about any site, but it’s more of a comfort for medium and large-scale sites with thousands to millions of pages.

If you read the first spreadsheet I included here, you’ll note that it appears that the total number of keywords used to discover and click through to the site spiked and then leveled off. However, if you were to look more closely at that data, you’d also observe that the plateau is directly related to both “Not Provided” and “Other,” with “Other” actually accounting for more keyword data being in one bucket than “Not Provided” (approximately 13% vs. 8%).

The more critical concept here is even though you may in fact be missing opportunities due to those two buckets, just look at the other aspect of the keyword count.

First, by cleaning up and refining the topical focus on the 100 most important pages and instilling proper SEO for Content Writing into the client mindset, we’ve taken the long tail to a whole new level, a level that people may have difficulty comprehending when I talk about REDUCING the number of keywords on a page.

Yet it’s that reduction and refinement, coupled with truly high-quality content writing that is GEARED TOWARD THE SITE VISITOR that allows search engines to actually finally do a really good job of figuring out that all the variations for each page and each section of the site really do match up from a user intent perspective.

If you were to unleash the “Other” and “Not Provided” phrases into the total count, you’d see that the site now generates visits via more than a million different phrases over the past six-month period. A MILLION PHRASES. For all the potential opportunities in “Not Provided,” how much time would YOU be able to spend analyzing over a million phrase variations looking for a few golden nuggets?

Personally, my client and I are quite content with not being able to dig into that specific metric in this case. So while it’s a bigger challenge for much smaller sites, when you’re at this level, I’m more concerned that Google says, “You have so much data we’re just going to group hundreds of thousands of phrases into ‘Other’.”